Now that is out of the way, here is what I did do:

- read some more papers on conservative visibility bounds

- implement basic visibility bounds

- Voxel Occlusion Testing: A Shadow Determination Accelerator for Ray Tracing - Woo A. & Amanatides J. (1990)

- A Survey of Visiblity for Walkthrough Applications - Cohen-Or D., Chrysanthou Y., Claudio T. S. (2000)

After reading these papers I implemented a basic lower and upper bound on the visibility. To detect clusters that are completely unoccluded (i.e. the points where a lower bound can be calculated) I used the shaft culling algorithm mention in my previous post. To verify whether a specific shaft is empty, I use a bounding volume hierarchy that is constructed during the preprocessing phase to quickly reject most primitives. The test is however conservative because it will only succeed if the entire bounding box is outside the shaft and no real primitives are tested against the shaft. Because testing a shaft is still quiet expensive, it is only used for lightcluster in the upper part of the hierarchy. In the results presented at the end of this post such test is only performed up to level 4 of the light tree which limits the number of shafts per lightcut to 31.

For detecting cluster that are completely occluded I currently use a very simple approach where one occlusion value (all lights are either occluded or not) is calculated for all cells in a regular grid. To estimate the occlusion value, a number of random rays are shot between both bounding boxes and tested for occlusion. In the results presented at the end, the scene is subdivided in 1 million cells which each use up to 1000 rays for the estimate. It is however important to notice that most cells only use a small fraction of these rays because once an unoccluded ray is found, the cell is categorized as not unoccluded. In the scene used less then 1% of maximum number of rays (1 billion) is actually tested.

To test this implementation I used one of the scenes used by Peter Shirley for testing radiosity. The scene consists of a room with a table with chairs lit by single area light source on the ceiling. The first and second pictures show the result of rendering this scene using regular lightcuts and lightcuts extended with the implementation described above and the following settings:

- resolution: 640x480

- samples/pixel: 4

- area light approx.: 1024 point lights

- render-machine: AMD X2 3800+ (single core used) with 1GB ram

- lightcuts rel. error: 2%

- max. cut size: 1000

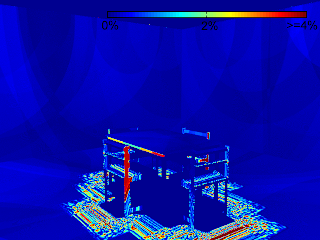

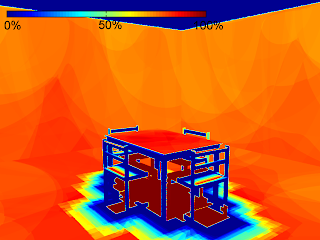

The following 2 images show the relative error (using a reference solution) for each pixel. The average relative error is 0.26% and 1.19% respectively. As expected using more tight bounds results in a relative error closer to the 2% target error.

The final image shows the relative reduction in cut size for each pixel. This reduction is largest in the occluded regions where the lightcuts algorithm uses the maximum cut allowed (i.e. 1000) and the extended implementation uses a minimal cut (i.e. 1). To verify that the upper bound on its own is not the major contributor to the improvement (i.e. making the lower bound useless in this scene) I rendered this scene using only a lower and only an upper bound on the visibility. The resulting cut sizes are 82 if only a lower bound on the visibility is used and 91 if only an upper bound on the visibility is used. The upper bound is however responsible for most of the reduction in render time.

No comments:

Post a Comment